The Rise of Adaptive AI

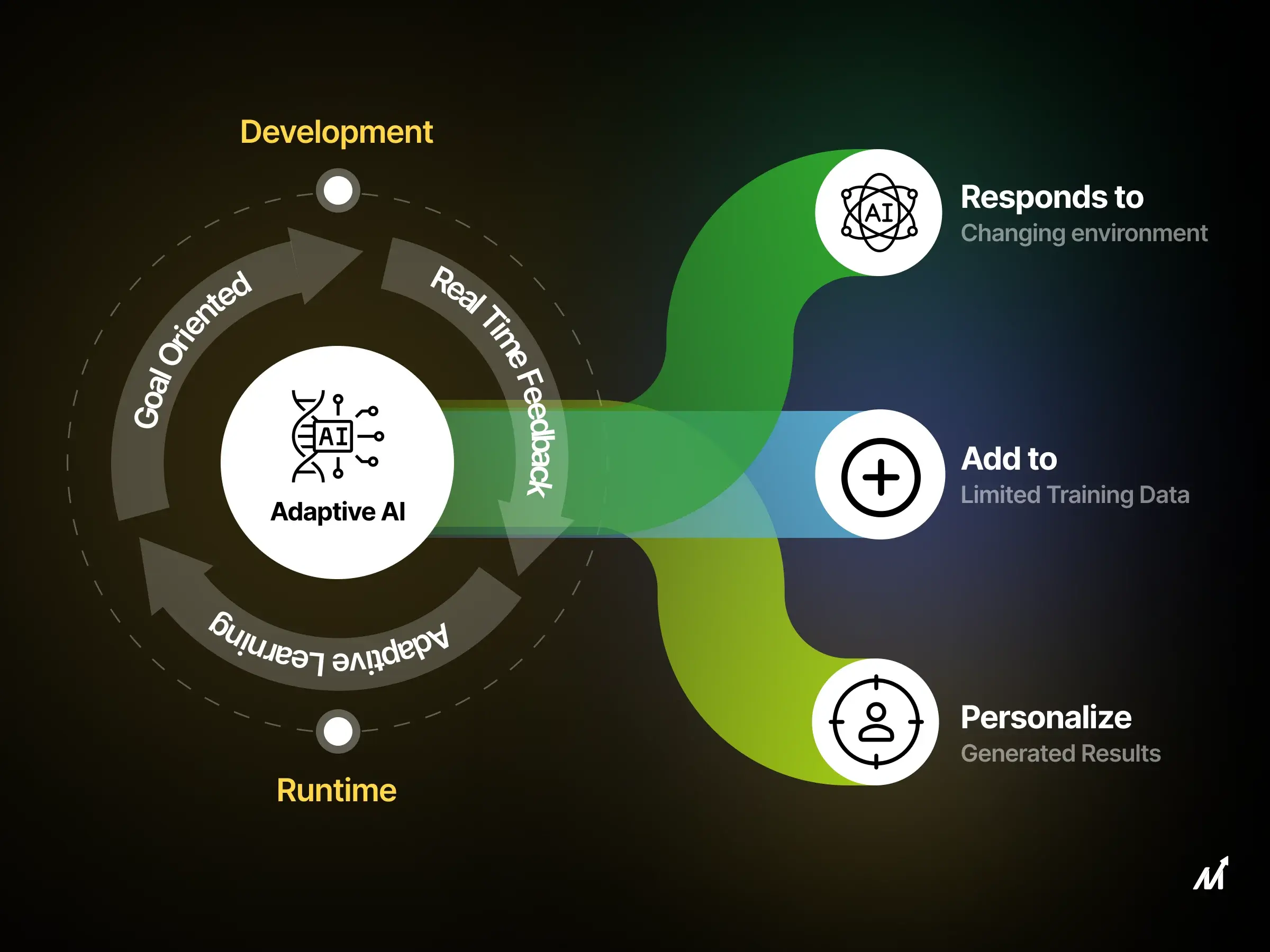

In the ever-evolving sphere of artificial and machine intelligence, a new paradigm is taking shape—Adaptive AI. This cutting-edge approach goes beyond mere algorithmic processes, stepping into the realm of cognitive adaptability. Adaptive AI systems are distinguished by their self-improving architecture, which dynamically refines its decision-making algorithms without requiring human oversight for adjustments.

For Adaptive AI, the continual ingestion of data serves as the lifeblood, enabling real-time updates to decision matrices. These self-evolving algorithms can interpret and integrate newly acquired data, effecting automatic code modifications to incorporate newfound knowledge.

This advent of self-correcting artificial intelligence algorithms positions enterprises to navigate intricate operational quandaries with unparalleled precision and efficiency. Adaptive AI has a transformative impact on business problem-solving. It can analyze large sets of financial transactions to identify fraud. In logistics, it optimizes complex supply chain variables for smoother operations. In healthcare, it sifts through massive datasets to detect emerging patterns in patient symptoms.

What is Adaptive Artificial Intelligence?

Adaptive Artificial Intelligence represents a paradigm shift from traditional, rule-based AI models. Unlike static systems, adaptive AI systems are built on computational models that can change their internal algorithms and adjust their decision-making logic on their own. This dynamism makes them more resilient and more proficient, which results in quicker and more accurate decisions. In essence, adaptive AI is self-directed, continuously evolving to optimize performance without human intervention.

As we navigate through this article, the subsequent sections will elaborate on the unique features of adaptive AI. We will also explore its practical applications across various industries.

Traditional AI and Adaptive AI

Let’s look at the landscape of traditional and adaptive AI and how the two differ from each other.

Traditional AI: The Current Landscape

Traditional AI systems primarily rely on pre-existing datasets to build a finite knowledge structure. Once these systems undergo their initial training phase, they form heuristic models that guide their future actions. This process results in locking their interpretative capabilities into a set paradigm. While this approach works efficiently for tasks that do not require constant adaptation, its limitations become apparent in environments that are subject to change. The rigid model fails to adapt, potentially becoming obsolete or ineffective over time.

Adaptive AI: The Evolutionary Advance

In sharp contrast to traditional AI, adaptive AI architectures are designed to be fluid and ever-changing. These systems perpetually update their information paradigms, enabling them to adapt to their surrounding environments dynamically. The key difference lies in the system’s ability to evolve its algorithms in real-time, thus ensuring its ongoing relevance. This continuous adaptation allows adaptive AI to optimize solutions for an array of challenges that may emerge, making it an ideal choice for applications that demand real-time intelligence and adaptability.

How is Adaptive AI different from Traditional AI?

| Criteria | Traditional Artificial Intelligence | Adaptive Artificial Intelligence |
| --- | --- | --- |
| Environmental Suitability | Optimal in settings with fixed, predictable parameters | Thrives in volatile, dynamic landscapes |
| Learning Trajectory | Finalizes learning once model is trained, leading to a static knowledge repository | Learns incessantly, evolving its algorithmic understanding in real-time |
| Performance Lifecycle | Subject to obsolescence due to static knowledge; performance may deteriorate | Self-iterates for enhancement, leading to increasingly robust performance |
| Scalability Quotient | Limited flexibility hampers scaling capabilities | Built for high scalability owing to its adaptive nature |
| Real-World Example | Spam Filters in Email Systems | Autonomous Vehicle Navigation |

Why is Adaptive AI Key for Business Growth?

In the era of accelerated digital transformation, the indispensability of evolving, or adaptive, Artificial Intelligence (AI) in fueling enterprise development cannot be overstated. Adaptive AI is the confluence of agent-oriented architecture and cutting-edge machine learning paradigms like reinforcement learning. This combination facilitates self-adjusting algorithms and operational behaviors to suit dynamic real-world conditions even during live execution. Instances of its practical applications are already evident; the military, for instance, employs adaptive learning platforms that autonomously customize pedagogical content and pacing to optimize individual skill acquisition.

Such flexibility in AI architecture heralds a paradigm shift in enterprise operational models, paving the way for innovative goods, services, and market penetration strategies. Furthermore, this enables the dissolution of operational silos, thus offering novel avenues for organizational synergies.

Here are the Multifold Benefits of Adaptive AI for Businesses

- Augmented Efficiency: By automating repetitive operations, adaptive AI liberates human capital, enhancing organizational productivity.

- Elevated Decisional Accuracy: By offering timely, data-backed insights, adaptive AI minimizes the scope for human fallibility in decision-making processes.

- Tailored User Experiences: AI models, once trained adaptively, discern distinct customer preferences, thereby allowing corporations to deliver bespoke services and merchandise.

- Strategic Edge: Early adoption of this technological innovation empowers firms to outmaneuver market competition in efficiency and ingenuity.

- Amplified Client Gratification: The potential for swift, apt customer interaction by adaptive AI notably escalates consumer contentment and allegiance.

- Fiscal Prudence: The amalgamation of automated operations and high-fidelity decision-making yields substantial cost reductions, reallocating resources for strategic investments.

- Enhanced Risk Mitigation: Adaptive AI’s capabilities extend to data analytics for preemptive identification of potential risks, facilitating proactive remedial actions.

While the promise of adaptive AI is monumental, its incorporation necessitates an overhaul of existing decision-making frameworks to actualize its full potential. This is especially pertinent in light of the imperative for ethical compliance and regulatory adherence, which stakeholders must vigilantly oversee. Let’s now understand the key techniques for Adaptive AI implementation.


4 Key Techniques of Adaptive AI Implementation

The arena of adaptive artificial intelligence (AI) encompasses an array of methodologies for constructing computational models capable of self-improvement. This section covers four groups of techniques: Reinforcement Learning Paradigms, in which an autonomous agent learns through interaction; Evolutionary Computation Frameworks, which take cues from natural selection; Granular Computing and Fuzzy Logic Systems, which specialize in handling ambiguous data; and additional methodologies such as Genetic Algorithms, Bayesian Inference Models, and Ensemble Techniques.

1. Reinforcement Learning Paradigms

Reinforcement Learning (RL) is a key subset of machine learning wherein an autonomous agent gains insights to navigate a given environment. RL epitomizes the adaptive nature of AI. It allows an autonomous agent to learn from its interactions within a specific environment, thereby adapting its strategy to optimize a defined objective. Critical elements involve:

- The state represents the ongoing condition of the environment.

- The action indicates the agent’s choice.

- The feedback mechanism could either be a reward or a penalty.

Libraries such as TensorFlow, PyTorch, and Keras-RL are pivotal for executing RL procedures.
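To make the state, action, and feedback elements concrete, here is a minimal sketch of tabular Q-learning, one common RL algorithm, written in plain Python rather than with any of the libraries above. The corridor environment, the reward values, and the function names (`step`, `train`, `greedy_policy`) are assumptions invented for illustration.

```python
import random

# Hypothetical toy environment (not from any library): a corridor of 5 cells.
# The agent starts in cell 0 and the episode ends when it reaches cell 4.
N_STATES = 5
ACTIONS = (-1, 1)  # index 0 = step left, index 1 = step right


def step(state, action_index):
    """Return (next_state, reward, done) for one move in the corridor."""
    next_state = min(max(state + ACTIONS[action_index], 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else -0.01  # reward at the goal, small penalty otherwise
    return next_state, reward, done


def train(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning with an epsilon-greedy policy."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action_index]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon:
                action = rng.randrange(len(ACTIONS))  # explore
            else:
                best = max(q[state])  # exploit, breaking ties at random
                action = rng.choice([i for i, v in enumerate(q[state]) if v == best])
            next_state, reward, done = step(state, action)
            # Feedback: move the estimate toward reward + discounted future value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


def greedy_policy(q):
    """Best action index for every non-terminal state."""
    return [max(range(len(ACTIONS)), key=lambda a: q[s][a]) for s in range(N_STATES - 1)]
```

Calling `greedy_policy(train())` returns the learned action for each cell. In this toy setup the agent should learn to step right everywhere, since that is the shortest path to the reward.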

2. Evolutionary Computation Frameworks

Evolutionary computation extends the adaptability of AI systems by mimicking natural evolution, using mechanisms such as selection, mutation, and recombination. It is typically used to find optimal or near-optimal solutions to complex problems that are difficult to solve with a fixed procedure. The approach starts with a batch of provisional solutions. After evaluation, the top-performing solutions proceed to a refinement stage, where they are recombined and mutated to produce the next generation of improved solutions. This cycle is repeated with the aim of continued optimization. Libraries such as DEAP and PyEvolve are commonly used to apply evolutionary computation techniques; Optuna, which is primarily a hyperparameter optimization framework, can also be used for this kind of search.
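The select, recombine, and mutate cycle can be sketched in a few lines of plain Python. This is a simplified illustration, not the API of DEAP or any other library; the objective function `fitness` and all parameter values are assumptions chosen for the example.

```python
import random


def fitness(x):
    """Hypothetical objective to maximize; its peak is at x = 3."""
    return -(x - 3.0) ** 2


def evolve(pop_size=20, generations=50, mutation_scale=0.5, seed=0):
    """Evolve a population of candidate numbers toward the best fitness."""
    rng = random.Random(seed)
    # Start with a batch of provisional (random) solutions.
    population = [rng.uniform(-10, 10) for _ in range(pop_size)]
    for _ in range(generations):
        # Selection: keep the top-performing half.
        population.sort(key=fitness, reverse=True)
        parents = population[: pop_size // 2]
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            child = (a + b) / 2  # recombination: average two parents
            child += rng.gauss(0, mutation_scale)  # mutation: random perturbation
            children.append(child)
        # The next generation is the survivors plus their offspring.
        population = parents + children
    return max(population, key=fitness)
```

Because the best parents are carried over unchanged, the best solution never gets worse from one generation to the next. That is a design choice (often called elitism) rather than a requirement of evolutionary methods.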

3. Granular Computing and Fuzzy Logic Systems

Fuzzy logic enhances adaptive AI by providing a systematic methodology for handling ambiguities or uncertainties in data. Unlike conventional Boolean logic, which operates in absolutes such as true/false or 1/0, fuzzy logic accommodates shades of grey and adapts the decision-making process to these ‘gray areas’, facilitating more nuanced decisions. Libraries that enable fuzzy logic computations include scikit-fuzzy (imported as skfuzzy) and fuzzy-logic-python. Note that fuzzywuzzy, despite its name, performs approximate string matching rather than fuzzy logic inference.
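As a rough illustration of degrees of truth, the sketch below uses triangular membership functions and a weighted average to turn a temperature into a fan speed. The temperature ranges, the output levels, and the function names are all assumptions made up for this example; a library such as scikit-fuzzy provides more complete tooling.

```python
def triangular(x, a, b, c):
    """Triangular membership: 0 at or outside a and c, rising to 1 at b."""
    if x <= a or x >= c:
        return 0.0
    if x <= b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


def fan_speed(temp_c):
    """Map a temperature to a fan speed (0-100) using three fuzzy sets."""
    # Degree to which the temperature is cold, warm, or hot (hypothetical ranges).
    cold = triangular(temp_c, -10, 0, 20)
    warm = triangular(temp_c, 10, 20, 30)
    hot = triangular(temp_c, 20, 35, 50)
    total = cold + warm + hot
    if total == 0:
        return 0.0  # outside every fuzzy set
    # Rules: cold -> speed 0, warm -> speed 50, hot -> speed 100.
    # Defuzzify with a membership-weighted average of the rule outputs.
    return (cold * 0 + warm * 50 + hot * 100) / total
```

At 25 °C the input is partly warm and partly hot, so the result falls between the two rule outputs instead of snapping to one of them.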

4. Additional Advanced Methodologies

- Genetic Algorithms: These algorithms are rooted in the principles of natural selection, enabling systems to adapt by optimizing their ‘genetic’ structures over time.

- Bayesian Inference Models: Bayesian techniques allow the AI model to update its predictions adaptively based on new data, providing a probabilistic framework to model uncertainty.

- Ensemble Techniques: By aggregating insights from multiple models, ensemble techniques improve the system’s adaptability, optimizing its overall performance based on diversified input.
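To show what updating predictions based on new data can look like in the Bayesian case, here is a small sketch using a Beta prior over a success probability (for example, a click-through rate). The scenario and the function names are assumptions for illustration only.

```python
def update_beta(alpha, beta, outcomes):
    """Update a Beta(alpha, beta) belief with a batch of 0/1 outcomes."""
    for outcome in outcomes:
        if outcome:
            alpha += 1  # one more observed success
        else:
            beta += 1  # one more observed failure
    return alpha, beta


def posterior_mean(alpha, beta):
    """Current estimate of the success probability."""
    return alpha / (alpha + beta)


# Start from a uniform prior, Beta(1, 1), then fold in new observations.
alpha, beta = update_beta(1, 1, [1, 0, 0, 1, 1, 1, 0, 1])
estimate = posterior_mean(alpha, beta)  # 6 / (6 + 4) = 0.6
```

Each new batch can be passed through `update_beta` again, so the estimate keeps adapting without retraining from scratch.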

How to Implement Adaptive AI?

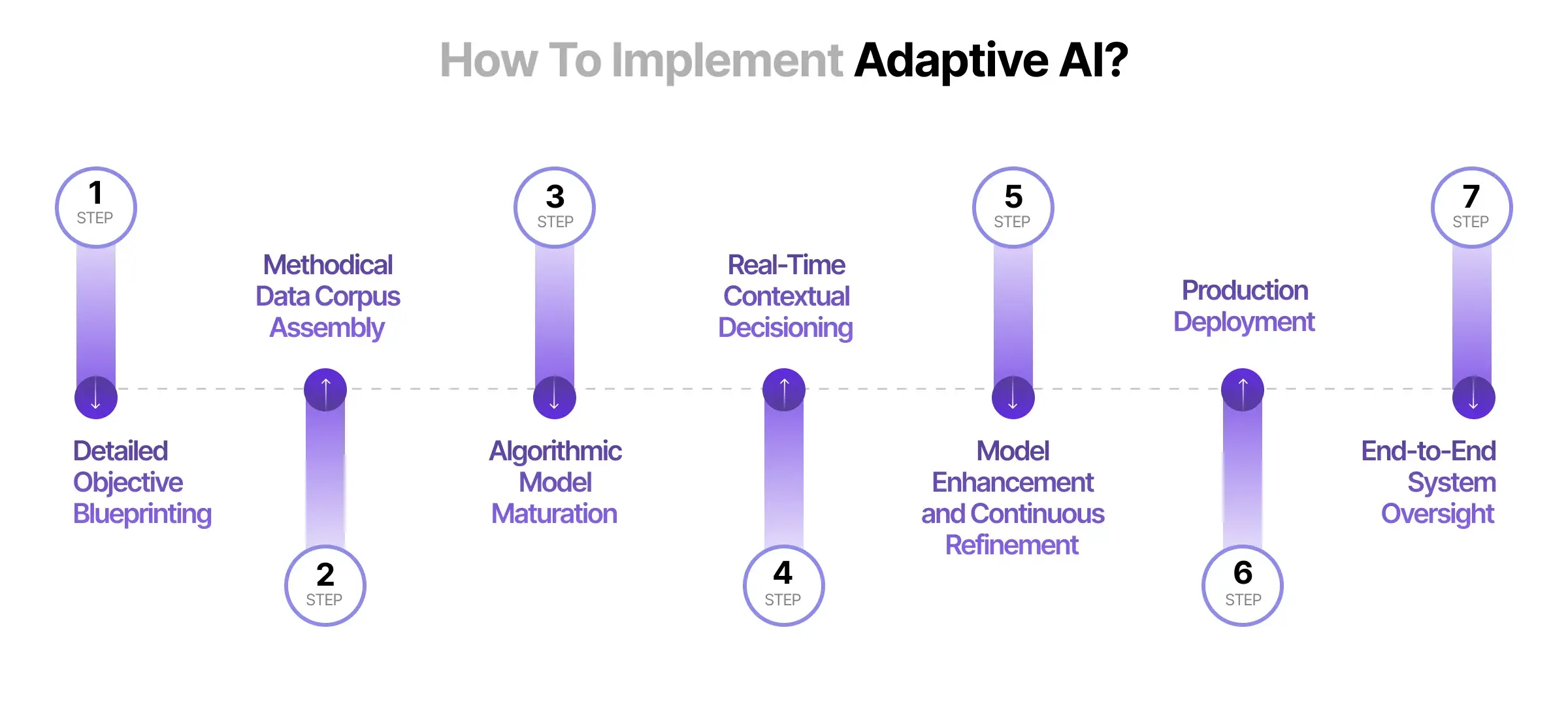

To enact an adaptive AI framework, one must focus on constructing algorithms capable of adjusting operational dynamics in response to environmental shifts and contextual variations. Below are enumerated steps for the effective deployment of adaptive AI systems.

Step 1: Detailed Objective Blueprinting

The initial step in deploying an adaptive AI system with high reliability and efficacy is setting unequivocal goals through an exhaustive elucidation of system objectives. This is a multi-faceted endeavor with several critical components.

- Precise Outcome Identification: The sine qua non of the system’s functionality needs explicit stipulation. It may range from classification paradigms like image or text categorization to more complex undertakings like user behavior predictions, resource allocation strategies, or market analysis. The clearer the outcome parameters, the more streamlined the subsequent phases of development.

- Performance Metric Engineering: Once the desired outcome is settled, a set of quantifiable metrics is needed for empirical evaluation. Metrics like accuracy, precision, recall, and Area Under the Curve (AUC) provide robust frameworks for performance assessment. These are pivotal in understanding how well the system satisfies the pre-established objectives and where potential adjustments might be warranted.

- Target Demographic Profiling: Systems do not operate in vacuums. They cater to specific user bases, which demands a deep understanding of demographic intricacies. The data taxonomy, decision trees, and UX/UI of any ancillary software interfaces will depend heavily on who the system aims to serve.
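The metrics named above can be computed directly from predicted and true labels. The following sketch covers accuracy, precision, and recall for a binary classifier; the label lists are made-up sample data, and the function name `binary_metrics` is an assumption.

```python
def binary_metrics(y_true, y_pred):
    """Return accuracy, precision, and recall for 0/1 labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


# Hypothetical labels for eight examples.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = binary_metrics(y_true, y_pred)
```

For this sample there are 3 true positives, 3 true negatives, 1 false positive, and 1 false negative, so all three metrics come out to 0.75.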

Once the objectives are clearly defined, we are better equipped to focus on data, the raw material for achieving these goals.

Step 2: Methodical Data Corpus Assembly

Data serves as the bedrock on which adaptive AI models are erected. A meticulous approach to data collection can pay dividends in the later stages of model development and deployment.

- Relevancy Alignment: A precise correlation between gathered data and the predefined objectives ensures that the machine learning models are well-fed with contextually relevant information.

- Data Diversity Fabrication: The system’s utility is a function of its generalization capacity, which, in turn, is influenced by the diversity of the training data. This extends from regional variations to cultural nuances and even demographic shifts.

- Temporal Coherence: Data ages, and outdated information can significantly hamper the system’s adaptability. Consequently, a mechanism for continual data updating is not merely beneficial but often essential.

- Secure Data Repository: Data custody involves the creation of a centralized, secure, and scalable database that can grow with the system. This is instrumental for subsequent phases, particularly in model training and evaluation.

With a comprehensive and relevant dataset in hand, we can proceed to the algorithmic modeling phase, where this data will be transformed into actionable insights.

Step 3: Algorithmic Model Maturation

This is where the data transforms into actionable intelligence. Several strategic steps underlie this process:

- Algorithmic Paradigm Selection: Depending on the nature of the problem, different types of machine learning algorithms, ranging from supervised and unsupervised learning to reinforcement learning paradigms, may be suitable. The selection process should consider compatibility with the data types available and the specificity of the problem being solved.

- Data Transformation Procedures: Before it can be used for model training, the data must undergo several preprocessing steps, including normalization, handling of missing values, and data splitting for validation sets.

- Rigorous Hyperparameter Optimization: Hyperparameter values like learning rate and regularization parameters determine a machine learning model’s efficiency. Techniques like grid search or random search can be leveraged for this optimization.

- Performance Benchmarking: Post-training, the model should be subjected to a comprehensive set of tests using a held-out dataset that was not used during training. This enables an unbiased assessment of the model’s efficacy, informing further tuning and adjustment protocols.
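The preprocessing, splitting, and grid search steps above can be sketched together in plain Python. This is a simplified illustration under stated assumptions: the data is synthetic, the model is a one-variable linear regression trained by gradient descent, and every function name is hypothetical.

```python
import random


def fit_min_max(values):
    """Learn normalization bounds from the training data only."""
    return min(values), max(values)


def apply_min_max(values, bounds):
    """Rescale values with previously learned bounds."""
    lo, hi = bounds
    return [(v - lo) / (hi - lo) for v in values]


def fit_line(xs, ys, lr, epochs=200):
    """Fit y = w*x + b by batch gradient descent on mean squared error."""
    w, b, n = 0.0, 0.0, len(xs)
    for _ in range(epochs):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        w -= lr * 2 * sum(e * x for e, x in zip(errors, xs)) / n
        b -= lr * 2 * sum(errors) / n
    return w, b


def mse(xs, ys, w, b):
    """Mean squared error of the line on a dataset."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def grid_search(train_set, val_set, learning_rates):
    """Return (best_lr, best_validation_mse) over the candidate learning rates."""
    results = []
    for lr in learning_rates:
        w, b = fit_line(*train_set, lr)
        results.append((mse(*val_set, w, b), lr))
    best_mse, best_lr = min(results)
    return best_lr, best_mse


# Synthetic data (an assumption for illustration): y is roughly linear in x.
rng = random.Random(0)
raw_x = [rng.uniform(0, 100) for _ in range(100)]
ys = [0.02 * x + 1 + rng.gauss(0, 0.05) for x in raw_x]

# Data splitting: first 80 points for training, last 20 for validation.
bounds = fit_min_max(raw_x[:80])
train_set = (apply_min_max(raw_x[:80], bounds), ys[:80])
val_set = (apply_min_max(raw_x[80:], bounds), ys[80:])

best_lr, best_mse = grid_search(train_set, val_set, [0.001, 0.01, 0.1, 0.5])
```

The normalization bounds are learned from the training split and then reused on the validation split, so no information from the validation data leaks into training. In practice a final, untouched test set would be used for the benchmark after the hyperparameters are chosen.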

After rigorously optimizing the model’s performance, we prepare it for real-world decision-making capabilities.

Step 4: Real-Time Contextual Decisioning

The potential of adaptive AI systems comes to the forefront in their ability to make real-time decisions based on live data streams.

- Multi-Sourced Data Integration: Collating data from disparate channels, such as IoT devices, web logs, and user interactions, creates a comprehensive landscape for decision-making.

- Data Preprocessing and Transformation: Real-time data is often messy and requires on-the-fly transformation to be usable for decision engines.

- Predictive Analytics: The primary function here is to generate immediate, contextually relevant decisions that align with the system’s established objectives.

- Feedback Loop Engineering: This is where adaptivity comes into play. A robust feedback mechanism ensures continual system refinement, tuning the predictive models in real time to enhance future performance.
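One simple way to realize such a feedback loop is online learning, where the model is nudged after every observed outcome. The sketch below assumes a one-feature linear model and a simulated data stream whose underlying relationship changes partway through; the class, the function, and the numbers are hypothetical.

```python
import random


class OnlineLinearModel:
    """Linear model updated one observation at a time (stochastic gradient descent)."""

    def __init__(self, lr=0.1):
        self.w, self.b, self.lr = 0.0, 0.0, lr

    def predict(self, x):
        return self.w * x + self.b

    def update(self, x, y):
        """Feedback step: nudge the parameters toward the observed outcome."""
        error = self.predict(x) - y
        self.w -= self.lr * error * x
        self.b -= self.lr * error
        return error


def run_stream(model, n_before=500, n_after=3000, seed=0):
    """Feed a simulated stream whose true relationship changes after n_before steps."""
    rng = random.Random(seed)
    for t in range(n_before + n_after):
        x = rng.random()
        # Simulated environment: y = 3x + 0.5 at first, then y = -x + 2.
        y = 3 * x + 0.5 if t < n_before else -1 * x + 2
        model.predict(x)  # real-time decision
        model.update(x, y)  # feedback once the true outcome is known
    return model


model = run_stream(OnlineLinearModel())
```

After the stream ends, the model's parameters track the second relationship rather than the first, without any explicit retraining step.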

While real-time decision-making is crucial, adaptability ensures that the model continuously improves its performance, a task accomplished in the next phase.

Step 5: Model Enhancement and Continuous Refinement

Even post-deployment, the model requires frequent updates and adjustments to adapt to evolving data landscapes and user needs.

- Hyperparameter Tuning Redux: Even after initial calibration, hyperparameters may need periodic adjustments to align with changing conditions or objectives.

- Feature Engineering: This includes techniques like principal component analysis or feature extraction to improve the model’s predictive power.

- Model Retraining and Retuning: Retraining the model becomes essential for maintaining system efficacy and responsiveness as fresh data gets ingested.

As the model is fine-tuned, it must eventually transition from a controlled environment to a production setting, prepared to operate at scale.

Step 6: Production Deployment

Transitioning the model from a sandbox environment to real-world applicability involves multiple facets:

- Operational Readiness: This involves converting the trained model into a deployable format such as TensorFlow’s SavedModel or PyTorch’s TorchScript.

- Infrastructure Provisioning: Building the requisite computational environment, either on-premise or cloud-based, sets the stage for model hosting.

- Lifecycle Management: Post-deployment, diligent monitoring and periodic updates ensure the model remains functional and accessible.

Once deployed, the model isn’t set in stone; it requires continuous oversight to maintain its effectiveness and adapt to new conditions.

Step 7: End-to-End System Oversight

To ensure longevity and efficacy, ongoing monitoring mechanisms are integral. Key operations include:

- Performance Telemetry: Consistent scrutiny of model reliability and overall performance is non-negotiable.

- Data Ingestion and Assay: Periodic data feeding enables model recalibration and offers insights into system health.

- Model Reiteration: With evolving data landscapes, periodic retraining or algorithmic pivoting may be necessitated.

- Component Augmentation: System updates in software or hardware may be imperative for functional sustenance and adaptive evolution.

By rigorously adhering to these steps, one ensures the construction of an adaptive AI system that aligns with predefined objectives and is versatile, robust, and amenable to ongoing refinement.


Navigating the Intricacies of Adaptive AI Deployment: Best Practices

Adopting adaptive AI is no easy feat. This section outlines key factors to consider for effectively deploying and maintaining adaptive AI systems.

1. Problem Complexity and Algorithmic Focus

Understanding the intricacies of the issue at hand is imperative for precisely determining the relevant datasets and algorithmic procedures that an adaptive AI architecture should adopt. This involves meticulously specifying performance indicators and establishing objectives congruent with the SMART (Specific, Measurable, Achievable, Relevant, Time-bound) framework. This paradigm provides an unequivocal path for the development team, ensuring optimal resource utilization and facilitating necessary recalibrations in system progression.

2. Data Integrity in Adaptive Learning

High-caliber, error-free data is the bedrock of a resilient, adaptive AI system. Poor data quality impairs the model’s generalization ability, consequently diminishing performance. Additionally, ensuring data diversity is not merely a recommendation but a requirement. An adaptive AI architecture thrives on diversity in its learning set to maintain its applicability across varying scenarios, particularly as it continually adapts to an evolving problem domain.

3. Algorithm Selection and Problem Specificity

Algorithms like reinforcement learning and online learning algorithms often dovetail nicely with adaptive systems. Nevertheless, the algorithmic choice is intricately tied to the type of data the system ingests. Online learning algorithms manifest their effectiveness in scenarios involving streaming data. In contrast, reinforcement learning algorithms prove invaluable in domains requiring sequential decision-making.

4. Performance Monitoring for Real-Time Applications

Ongoing monitoring, predicated on metrics intrinsically aligned with set objectives, provides a feedback loop for assessing an adaptive AI system’s efficacy. This becomes crucial for real-time applications of adaptive AI, ensuring that progress is tracked and potential performance bottlenecks are identified proactively. Moreover, continual monitoring facilitates real-time refinements aimed at performance optimization.

5. Addressing Concept Drift

Adaptive AI systems risk performance degradation due to concept drift, a phenomenon where shifts in data distribution over time compromise model effectiveness. Implementing robust detection techniques for these changes is essential. One viable approach incorporates online learning algorithms that constantly learn from and adapt to fresh data streams. Another strategy involves periodic retraining of the model on the most recent data, thereby maintaining its accuracy and relevance.
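A very simple form of drift detection compares the model's recent error rate with a baseline recorded earlier. The sketch below is one naive approach under stated assumptions (a fixed window and a fixed threshold, both hypothetical values), not a substitute for established drift detection methods.

```python
from collections import deque


class DriftMonitor:
    """Flag possible concept drift when the recent error rate rises above a baseline."""

    def __init__(self, window=50, threshold=0.15):
        self.recent = deque(maxlen=window)  # 1 = wrong prediction, 0 = correct
        self.baseline = None  # error rate of the first full window
        self.threshold = threshold  # allowed increase over the baseline

    def observe(self, correct):
        """Record one prediction outcome; return True if drift is suspected."""
        self.recent.append(0 if correct else 1)
        if len(self.recent) < self.recent.maxlen:
            return False  # not enough data yet
        rate = sum(self.recent) / len(self.recent)
        if self.baseline is None:
            self.baseline = rate  # the first full window sets the reference
            return False
        return rate - self.baseline > self.threshold
```

When `observe` returns True, the system could trigger one of the responses described above, such as retraining on the most recent data.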

6. Quality Assurance Through Testing

Comprehensive testing frameworks play a pivotal role in affirming the reliability of adaptive AI systems. Such frameworks should encompass multiple testing paradigms like unit, integration, and performance testing. While unit tests isolate individual components for functionality verification, integration tests scrutinize the interplay between these components. On the other hand, performance tests gauge the system’s operational efficiency and scalability. Implementing a varied dataset for testing ensures that the system’s performance is validated under a spectrum of conditions, facilitating the identification of areas requiring refinement.

7. Ethical and Fair Practices

Fairness metrics and continual monitoring are vital to mitigating biases in adaptive AI systems. These metrics quantify the degree to which a system treats diverse groups equitably, aiding in identifying any underlying bias or discriminatory behavior. The training data and algorithms must undergo regular assessments to filter out biases and apply bias-reducing algorithms when required. Transparency in operational policies is vital for stakeholder comprehension and system accountability.
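As one example of a fairness metric, the sketch below computes the demographic parity difference: the gap between the highest and lowest rates of positive predictions across groups. The predictions, the group labels, and the function name are made up for illustration, and this is only one of several fairness definitions.

```python
def demographic_parity_difference(predictions, groups):
    """Return (gap, rates): the spread in positive-prediction rate across groups."""
    by_group = {}
    for pred, group in zip(predictions, groups):
        by_group.setdefault(group, []).append(pred)
    # Share of positive (1) predictions within each group.
    rates = {g: sum(p) / len(p) for g, p in by_group.items()}
    return max(rates.values()) - min(rates.values()), rates


# Hypothetical model outputs (1 = approved) for two groups, "a" and "b".
predictions = [1, 1, 0, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
gap, rates = demographic_parity_difference(predictions, groups)
```

Here group "a" is approved 75% of the time and group "b" 25% of the time, giving a gap of 0.5. Tracking such a gap over time is one way to monitor whether an adapting model is drifting toward unequal treatment.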

8. Explainable AI Techniques

The inherently dynamic nature of adaptive AI systems poses challenges in interpreting their operational logic, often leading to stakeholder mistrust. Explainable AI (XAI) methods bridge this gap by delivering transparent and understandable explanations for the system’s decision-making processes. Employing such techniques fortifies stakeholder trust and augments system accountability, as the system’s decisions can be audited for fairness and ethical considerations.

9. Security Protocols

The ubiquity of AI systems amplifies their vulnerability to security threats from adversarial actors. Measures like security audits, penetration testing, and various other security evaluations fortify an adaptive AI system’s defense mechanisms. This ensures the system’s robustness, preserving its integrity against unauthorized intrusions that could manipulate its input or compromise stored sensitive data.

By meticulously adhering to these guidelines, adaptive AI systems can be built and maintained with the highest degree of efficacy, reliability, and ethical soundness, thereby revolutionizing their applications across diverse sectors.

What Does Gartner say about Adaptive AI Digital Work Trends?

Adaptive AI is having a revolutionary impact on healthcare, education, and cybersecurity. Gartner, a leading research firm, highlights how adaptive AI is making a difference. Let’s dive into what they say about the latest trends across these sectors.

1. Medical Sector

The United States Food and Drug Administration is slated to inaugurate an accreditation framework specifically designed for Artificial Intelligence-based tools within healthcare settings. The expectation is to comprehensively disseminate these AI platforms across medical facilities in the nation.

2. Digital Education

Academic institutions and educational providers increasingly deploy AI-driven algorithms to customize curriculum and learning resources. The goal is to dynamically adjust to individual student performance and learning velocity. The anticipation is that such tailoring will elevate overall educational outcomes, including high school graduation rates, collegiate achievements, and credential acquisitions.

3. Governance of Trust and Cybersecurity

Artificial Intelligence can continuously self-evolve, rendering it particularly effective in scrutinizing subtle changes in online user behaviors that may go unnoticed by human analysts. Consequently, AI surpasses human capability in identifying vulnerabilities, preserving critical identities and enterprise applications, detecting cybersecurity risks, executing timely countermeasures, and instituting recovery mechanisms.

Adaptive AI Pioneers: Illustrative Cases for Adaptive AI’s Scope

From optimizing analytics in the chemical industry to fine-tuning surveillance technologies, adaptive AI is proving to be transformative. Let’s dive into some illustrative cases that showcase the vast scope of adaptive AI’s capabilities.

1. Dow Chemicals: Optimizing Analytics with Adaptive AI

Dow Chemicals, a U.S.-based multinational in the chemical and materials sector, employs adaptive artificial intelligence systems that augment the capabilities of its enterprise analytics framework. These systems intelligently process real-time data relating to usage patterns and value-centric parameters, thereby dramatically enhancing the efficacy of their analytics initiatives. This led to an astronomical 320% augmentation in the value yielded from their analytics infrastructure.

2. Cerego: Adaptive Learning for Individualized Education

Cerego, an AI-infused instructional platform commissioned by the U.S. military, employs adaptive learning algorithms. These algorithms are engineered to diagnose pedagogical content, calibrate measurement metrics, and optimally schedule assessment intervals. They dynamically alter their teaching modalities to dovetail with the individualized learning trajectories of each user.

3. The Danish Safety Technology Authority: Transforming Surveillance through AI

The Danish Safety Technology Authority (DSTA) has transcended conventional limitations by deploying an AI-driven surveillance apparatus. This apparatus is fine-tuned to instantly identify products and their respective manufacturing entities, accelerating the safety verification timeline. The adaptive AI technology has proved so transformative that it has burgeoned into a separate product suite and is now operational in 19 additional European jurisdictions.

Embracing the potential of adaptive AI with Markovate

In a nutshell:

Adaptive AI: Why It Matters for Businesses

- Navigates volatile market conditions with agility

- Enhances decision-making through integrated intelligence

- Enables real-time, optimized user interactions

Markovate’s Expertise

- Industry leader in Adaptive AI technology

- Specialized knowledge for adapting to AI-driven landscapes

- Helps businesses adopt new operational models that are a necessity rather than an option

- Prepares your business for digital transformation so it stays competitive

Partner with Markovate

I’m Rajeev Sharma, Co-Founder and CEO of Markovate, an innovative digital product development firm with a focus on AI and Machine Learning. With over a decade in the field, I’ve led key projects for major players like AT&T and IBM, specializing in mobile app development, UX design, and end-to-end product creation. Armed with a Bachelor’s Degree in Computer Science and Scrum Alliance certifications, I continue to drive technological excellence in today’s fast-paced digital landscape.
