Images emitted by OpenAI’s generative models will include metadata disclosing their origin, which in turn can be used by applications to alert people to the machine-made nature of that content.

Specifically, the Microsoft-championed super lab is, as expected, adopting the Content Credentials specification, which was devised by the Coalition for Content Provenance and Authenticity (C2PA), an industry body backed by Adobe, Arm, Microsoft, Intel, and more.

Content Credentials is pretty simple and specified in full here: it uses standard data formats to store within media files details about who made the material and how. This metadata isn’t directly visible to the user and is cryptographically protected so that any unauthorized changes are obvious.
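For the curious, here is a minimal sketch of how a tool might check whether a PNG carries this kind of embedded metadata at all. It assumes, as we understand the C2PA specification, that the manifest is stored in a PNG chunk of type `caBX`; the function names are ours, purely for illustration. It only detects that such a chunk is present and makes no attempt to validate the cryptographic signature, which is the job of a proper C2PA library.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"

# Assumption: C2PA manifests in PNG files live in a chunk of this type.
C2PA_CHUNK_TYPE = "caBX"


def png_chunk_types(data: bytes) -> list[str]:
    """Return the chunk type names found in a PNG byte string, in order."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    types = []
    offset = len(PNG_SIGNATURE)
    # Each chunk is: 4-byte big-endian length, 4-byte type, data, 4-byte CRC.
    while offset + 8 <= len(data):
        length, chunk_type = struct.unpack(">I4s", data[offset:offset + 8])
        types.append(chunk_type.decode("latin-1"))
        offset += 12 + length
    return types


def has_c2pa_chunk(data: bytes) -> bool:
    """True if the PNG appears to contain an embedded C2PA manifest chunk."""
    return C2PA_CHUNK_TYPE in png_chunk_types(data)
```

Calling `has_c2pa_chunk(open("image.png", "rb").read())` on a file would report whether the chunk is there; a `False` result says nothing about where the image came from, only that no such chunk was found.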

Applications that support this metadata, when they detect it in a file’s contents, are expected to display a little “cr” logo over the content to indicate there is Content Credentials information present in that file. Clicking on that logo should open up a pop-up containing that information, including any disclosures that the stuff was made by AI.

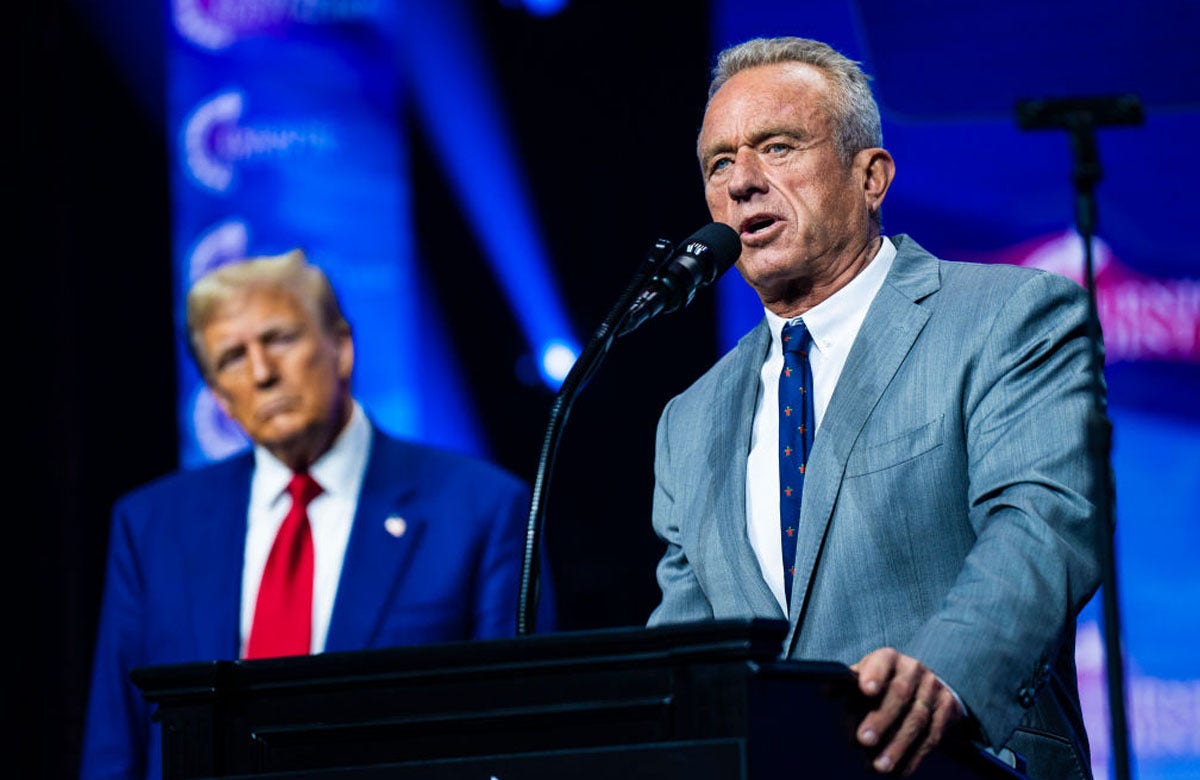

How the C2PA ‘cr’ logo might appear on an OpenAI-generated image in a supporting app. Source: OpenAI

The idea here is that it should be immediately obvious to people viewing or editing stuff in supporting applications – from image editors to web browsers, ideally – whether or not the content on screen is AI made.

Helping people distinguish between real and faked images will no doubt become increasingly important as AI-generated content improves to the point at which it is not obviously the output of a machine. Experts fear that such synthetic media will mislead netizens and be used to sow misinformation or support disinformation campaigns. Tech companies are stepping up efforts to minimize risks as, for one thing, the 2024 US presidential election nears.

Yet the Content Credentials strategy isn’t foolproof, as we’ve previously reported. The metadata can easily be stripped out, files can be exported without it, or the “cr” can be cropped out of screenshots, so that no “cr” logo appears on the material in any application thereafter. The scheme also relies on apps and services supporting the specification, whether they are creating or displaying media.

To work at scale and gain adoption, it also needs some kind of cloud system that can be used to restore removed metadata, which Adobe happens to be pushing, as well as a marketing campaign to spread brand awareness. Increase its brandwidth, if you will.

OpenAI acknowledged as much this week when announcing that ChatGPT and the DALL-E 3 API will include C2PA’s Content Credentials in their output.

“Metadata like C2PA is not a silver bullet to address issues of provenance,” OpenAI admitted. “It can easily be removed either accidentally or intentionally. For example, most social media platforms today remove metadata from uploaded images, and actions like taking a screenshot can also remove it. Therefore, an image lacking this metadata may or may not have been generated with ChatGPT or our API.”

In terms of file-size impact, OpenAI insisted that a 3.1MB PNG file generated by its DALL-E API grows by about three percent (or about 90KB) when including the metadata. A 287KB WebP file, meanwhile, will jump by about 32 percent with that 90KB payload.
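As a quick sanity check on those percentages, the back-of-the-envelope sum looks like this. It is only a sketch: it treats 3.1MB as 3,100KB and takes the payload as exactly 90KB, both of which are rounded figures, so the WebP result lands a shade under the quoted 32 percent.

```python
def overhead_percent(original_kb: float, added_kb: float) -> float:
    """Growth in file size, as a percentage of the original size."""
    return 100 * added_kb / original_kb


# Rounded figures from the article; 3.1MB is taken as 3,100KB (an assumption).
png_growth = overhead_percent(3100, 90)
webp_growth = overhead_percent(287, 90)

print(f"PNG:  {png_growth:.1f}%")   # PNG:  2.9%
print(f"WebP: {webp_growth:.1f}%")  # WebP: 31.4%
```

The point stands either way: a fixed-size metadata payload is a rounding error on a big PNG and a noticeable chunk of a small, well-compressed WebP.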

C2PA is gaining traction: as more apps and services pledge to support it, more software can use it, more people should see it, and so it grows. Meta announced this week it was building tools to support the industry group’s specification.

“We believe that adopting these methods for establishing provenance and encouraging users to recognize these signals are key to increasing the trustworthiness of digital information,” OpenAI declared.

We’re told ChatGPT via the web and the DALL-E 3 model via its API will now include the C2PA metadata, and mobile users will get it by February 12. ®
