Introduction

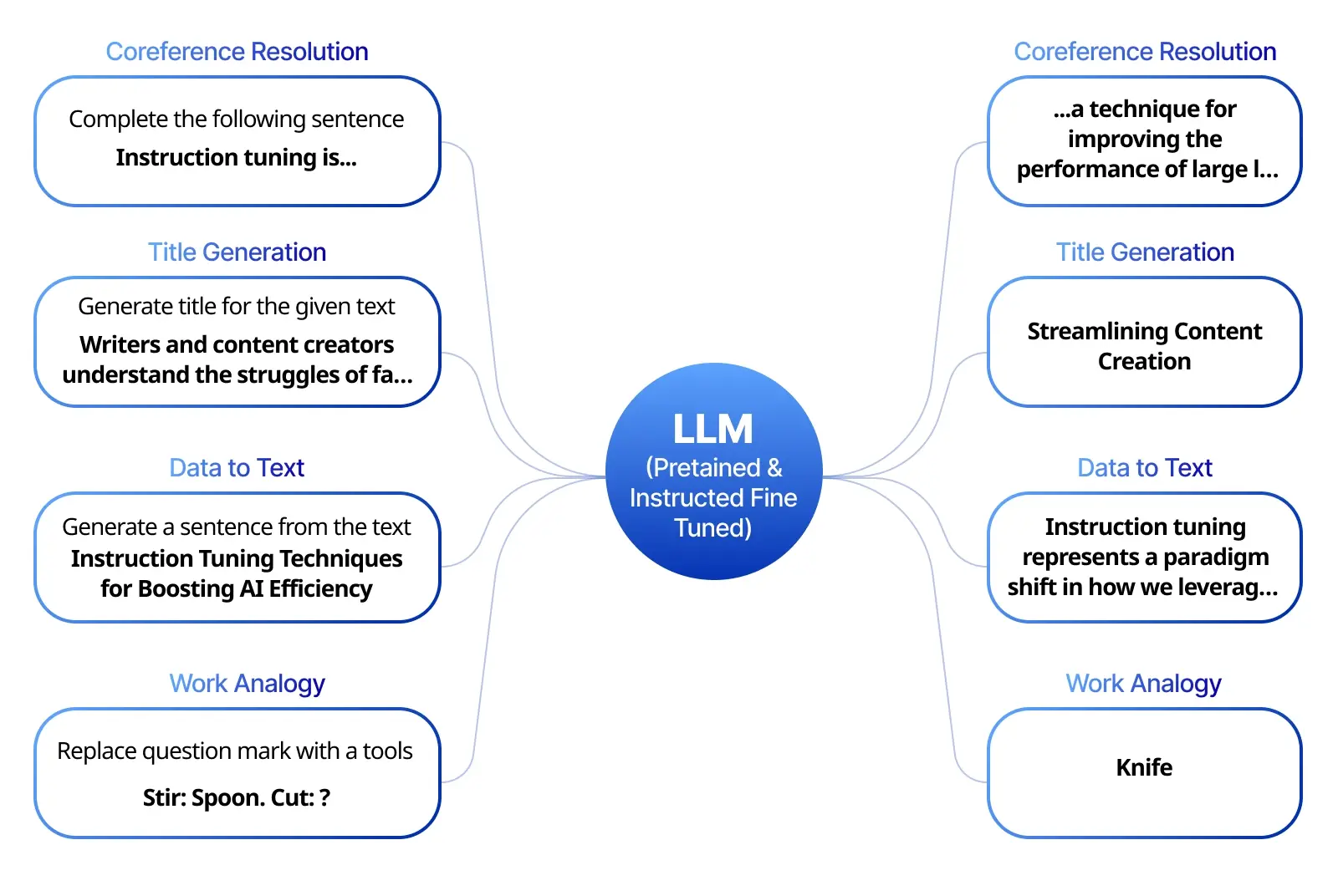

Instruction tuning represents a paradigm shift in how we leverage large language models (LLMs). Instead of relying solely on a pre-trained model’s ability to continue text, instruction tuning fine-tunes the model on examples of instructions paired with the responses we want. This aligns it more closely with specific tasks and user expectations. The result is a more versatile model that can follow a wide variety of prompts, including requests for tasks it was never explicitly trained on.

Imagine having an assistant who understands your instructions not just grammatically but contextually, providing helpful and accurate responses. This is the power that instruction tuning unlocks in the field of natural language processing. But how does it achieve this, and what implications does it have?

Demystifying Instruction Tuning

Before instruction tuning, fine-tuning a pre-trained model usually meant specializing it in a single area such as sentiment analysis or question answering. This approach had limitations: the model’s expertise stayed confined to the tasks it was trained on, and it struggled to transfer that knowledge to different tasks, even closely related ones.

Instruction tuning seeks to overcome this by training LLMs on a dataset of instructions paired with desired outputs. Rather than teaching one task, it teaches the model how to interpret and act on instructions in general. In this way, instruction tuning bridges the gap between “knowing how” to perform specific tasks and understanding how to carry out new instructions, resulting in greater adaptability and effectiveness across diverse applications.

Beyond Just Words: Understanding User Intent

Think of it this way. You ask a friend, “Could you tell me how to bake bread?” versus “Please explain the science of bread making.” Both involve bread, yet the intent differs greatly.

A pre-trained model without further fine-tuning might latch onto the common theme (bread) and miss the nuanced difference between your two requests. An instruction-tuned model is better at telling these instructions apart, providing a recipe in one case and an explanation of yeast activity and gluten development in the other. Instruction tuning helps the model discern what the user is actually asking for, rather than merely continuing the text in the most statistically likely way.

A Glimpse into the Mechanics: How It Works

Instruction tuning works by leveraging the power of supervised learning, specifically with a dataset composed of instruction-output pairs. Consider this simple table to visualize the structure:

| Instruction | Desired Output |
| --- | --- |
| Summarize the main points of the French Revolution. | The French Revolution, a period of radical social and political upheaval in France and Europe, was marked by events such as the storming of the Bastille, the Reign of Terror, and the rise of Napoleon. |
| Translate “Hello world” into Spanish. | Hola mundo. |

This structured approach enables the model to learn a broader range of language tasks and apply that understanding when encountering instructions it hasn’t seen before.
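To make the structure concrete, here is a minimal sketch of how rows like those in the table above might be turned into training text. The `TEMPLATE` string with its `### Instruction:` / `### Response:` markers, the `Example` class, and `to_training_pair` are all hypothetical names chosen for illustration; real projects pick their own template, and nothing here is a standard format.

```python
from dataclasses import dataclass

# Hypothetical prompt template: the section markers are an assumption, not a standard.
TEMPLATE = "### Instruction:\n{instruction}\n\n### Response:\n"


@dataclass(frozen=True)
class Example:
    """One instruction-output pair, like a row of the table above."""
    instruction: str
    output: str


def to_training_pair(example: Example) -> tuple[str, str]:
    """Return (prompt, target): the model reads the prompt and learns to produce the target."""
    prompt = TEMPLATE.format(instruction=example.instruction.strip())
    target = example.output.strip()
    return prompt, target


dataset = [
    Example('Translate "Hello world" into Spanish.', "Hola mundo."),
    Example(
        "Summarize the main points of the French Revolution.",
        "The French Revolution was a period of radical social and political "
        "upheaval in France and Europe.",
    ),
]

for prompt, target in map(to_training_pair, dataset):
    print(prompt + target, end="\n\n")
```

Keeping the prompt and target separate (rather than storing one concatenated string) makes it easy to treat the two parts differently during training.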

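Researchers found that adding more tasks to the instruction tuning dataset improved the model’s ability to respond accurately to instructions for tasks it had not been trained on, as Google AI researchers reported in “Finetuned Language Models Are Zero-Shot Learners.” The same study found that these gains appeared only in sufficiently large models; for smaller models, instruction tuning actually hurt performance on unseen tasks.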

This suggested that, rather than simply becoming adept at specific tasks, the models were improving their ability to interpret instructions in a more general way. Think of it as moving beyond rote memorization toward a more transferable skill.
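Training on many tasks at once requires combining them into a single dataset. The sketch below shows one simple way to do that, assuming each task is a list of (instruction, output) pairs: every task contributes at most `cap` examples so that a huge task does not drown out small ones, and the result is shuffled so batches mix task types. The function name, the cap, and the toy tasks are illustrative assumptions, not the mixing scheme of any particular paper.

```python
import random


def build_mixture(
    tasks: dict[str, list[tuple[str, str]]], cap: int, seed: int = 0
) -> list[tuple[str, str, str]]:
    """Combine many tasks into one shuffled instruction-tuning set.

    Each task contributes at most `cap` examples so that very large tasks do
    not drown out small ones. Returns (task_name, instruction, output) triples.
    """
    rng = random.Random(seed)
    mixture: list[tuple[str, str, str]] = []
    for name, examples in tasks.items():
        # Down-sample oversized tasks; keep small tasks whole.
        chosen = examples if len(examples) <= cap else rng.sample(examples, cap)
        mixture.extend((name, instruction, output) for instruction, output in chosen)
    rng.shuffle(mixture)  # interleave tasks so batches mix task types
    return mixture


# Hypothetical toy tasks of very different sizes.
tasks = {
    "translation": [(f"Translate sentence {i} into Spanish.", f"Frase {i}.") for i in range(100)],
    "summarization": [(f"Summarize document {i}.", f"Summary {i}.") for i in range(5)],
}
mixture = build_mixture(tasks, cap=10)
```

With `cap=10`, the 100-example translation task is down-sampled to 10 while the 5-example summarization task is kept whole, giving 15 examples in total.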

Key Benefits of Instruction Tuning

The advantages of instruction tuning go beyond simply fine-tuning a model for a handful of specific tasks. It is a step towards a more robust and multi-faceted language model. This translates into tangible benefits, both in research and application:

- Improved Zero-Shot Performance: Instruction-tuned models generalize remarkably well. “Zero-shot” refers to their ability to understand and respond correctly to entirely new instructions without having been explicitly trained on those particular tasks. It’s like teaching someone the fundamentals of cooking: they may never have made a specific dish, but that foundation lets them follow the recipe successfully.

- Increased Efficiency: From a development standpoint, instruction tuning an existing pre-trained model takes far less time, compute, and training data than the daunting task of pre-training a large model from scratch. It also reduces reliance on prompt engineering: with a base pre-trained model, you might need intricate, highly specific prompts to elicit the desired outputs.

- Enhanced User Experience: Users can give instructions in plain natural language instead of crafting cryptic prompts, and they see greater accuracy in task completion because the model is better equipped to understand what they are asking.
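Zero-shot performance is only meaningful if the evaluation tasks were truly absent from training. A minimal sketch, in the spirit of the held-out-task setup used in the FLAN paper, is to hold out entire tasks rather than individual examples. The `split_by_task` helper and the toy data are hypothetical.

```python
def split_by_task(
    examples: list[tuple[str, str, str]], held_out: set[str]
) -> tuple[list[tuple[str, str, str]], list[tuple[str, str, str]]]:
    """Split (task, instruction, output) triples into a training set and a zero-shot eval set.

    Entire tasks are held out, so every evaluation instruction belongs to a task
    type the model never saw during instruction tuning.
    """
    train = [ex for ex in examples if ex[0] not in held_out]
    zero_shot_eval = [ex for ex in examples if ex[0] in held_out]
    return train, zero_shot_eval


examples = [
    ("translation", 'Translate "Hello world" into Spanish.', "Hola mundo."),
    ("summarization", "Summarize the main points of the French Revolution.",
     "A period of radical upheaval in France and Europe."),
    ("sentiment", "Is this review positive or negative? 'Loved it!'", "Positive"),
]
train, zero_shot_eval = split_by_task(examples, held_out={"sentiment"})
```

Splitting at the task level, rather than randomly by example, is the design choice that matters here: a random split would leak every task type into training and overstate zero-shot ability.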

Real-world Applications of Instruction Tuning

Moving beyond the theoretical, let’s explore some practical applications of instruction tuning:

Elevating Chatbots and Virtual Assistants

Imagine conversing with customer service chatbots or virtual assistants like Siri and Alexa that not only understand but also accurately follow through on multi-step directions, answer your questions in a comprehensive and helpful manner, and adapt to different conversational styles.

Instead of receiving generic, pre-programmed answers, you engage in genuinely interactive and efficient conversations. Instruction tuning is paving the way for interactions with AI that feel as natural and intuitive as talking to another person. This shift makes AI more approachable, versatile, and integral to everyday life, bringing us closer to truly conversational AI: bots and virtual assistants that feel less like machines and more like helpful, intelligent companions.

Powering Educational Tools and Platforms

Instruction tuning is also transforming educational platforms. Imagine intelligent tutoring systems that provide personalized instructions, detailed explanations, and even different types of assessments, all adapted to a student’s learning pace and style. Whether breaking down complex topics into easily digestible formats, adapting exercises to match student skill levels, or offering tailored feedback, instruction-tuned models hold immense potential for personalized education. They empower educators and open up exciting opportunities for delivering customized, effective learning experiences, making learning more engaging and helping students grasp concepts more readily.

Language support matters too, since the ability to translate effectively is paramount in our globalized world. Google AI’s study “Finetuned Language Models Are Zero-Shot Learners” is a notable example: it reports that instruction tuning improved zero-shot performance across a wide range of tasks, including translation.

Streamlining Content Creation

Writers and content creators understand the struggles of facing a blank page or hitting a creative roadblock. Instruction-tuned models can assist in several ways. By providing specific instructions and context, these models can generate various creative text formats, from poems and scripts to articles and social media posts, simplifying the writing process for individuals and businesses alike.

Instruction-tuned models can help with tasks ranging from drafting emails and summarizing lengthy documents to translating text and crafting creative content in diverse writing styles, such as poems, scripts, or even musical pieces. This makes it possible to produce high-quality text tailored to specific purposes, streamlining workflows and letting creators focus on refining the output, which ultimately leads to higher-quality content overall.

As with all technological advancements, these exciting possibilities come with challenges. Building high-quality, comprehensive instruction datasets is resource-intensive and time-consuming. Any bias present in the data used to train these models must also be carefully considered.

How Markovate Can Help with Instruction Tuning

At Markovate, we specialize in optimizing AI models through instruction tuning, ensuring they meet our clients’ unique needs. Our process begins with meticulous dataset curation and preparation. We then clean and preprocess this data to ensure it’s well-labeled and formatted correctly, laying a solid foundation for effective training.
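As a generic illustration of what cleaning instruction data can involve (a hypothetical sketch, not Markovate’s actual tooling), the function below drops records with a missing instruction or output and removes duplicates that differ only in case or spacing. It assumes each record is a dict with hypothetical `"instruction"` and `"output"` keys.

```python
def _normalize(text: str) -> str:
    """Collapse whitespace and case so near-identical records compare equal."""
    return " ".join(text.split()).lower()


def clean_records(records: list[dict]) -> list[dict]:
    """Drop incomplete records and duplicates from raw instruction data.

    Assumes each record is a dict with hypothetical "instruction" and "output" keys.
    Original formatting (e.g. line breaks in code answers) is preserved; only the
    duplicate check uses the normalized form.
    """
    seen: set[tuple[str, str]] = set()
    cleaned = []
    for record in records:
        instruction = str(record.get("instruction") or "").strip()
        output = str(record.get("output") or "").strip()
        if not instruction or not output:
            continue  # a missing label makes the pair useless for supervised training
        key = (_normalize(instruction), _normalize(output))
        if key in seen:
            continue  # duplicate after normalization
        seen.add(key)
        cleaned.append({"instruction": instruction, "output": output})
    return cleaned


raw = [
    {"instruction": 'Translate "Hello world" into Spanish.', "output": "Hola mundo."},
    {"instruction": '  translate "hello   world" into spanish. ', "output": "hola mundo."},
    {"instruction": "Summarize this article.", "output": ""},
    {"output": "An answer with no instruction."},
]
print(clean_records(raw))  # only the first record survives
```

Real pipelines usually go further (near-duplicate detection, label-quality review, format validation); this shows only the basic filtering step.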

Leveraging our expertise, we help select the most suitable pre-trained model and, if necessary, develop custom model architectures tailored to your requirements. Our implementation of instruction tuning techniques includes supervised fine-tuning with labeled datasets, reinforcement learning strategies for continuous improvement, and transfer learning to adapt pre-trained models with minimal data.

To ensure the model’s accuracy and effectiveness, we define key performance indicators (KPIs) and conduct thorough error analysis, identifying areas for further enhancement. Once the model is fine-tuned, we develop scalable solutions for seamless integration into your existing systems, backed by continuous monitoring and improvement post-deployment.
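One simple KPI for instruction-following is exact-match accuracy broken down by task, paired with a list of failures to inspect by hand. The sketch below is a generic illustration with hypothetical function names and toy data, not Markovate’s evaluation process; exact match suits short answers like translations or labels, while open-ended outputs typically need other metrics or human review.

```python
from collections import defaultdict


def _matches(prediction: str, reference: str) -> bool:
    """Exact match after trimming whitespace and ignoring case."""
    return prediction.strip().lower() == reference.strip().lower()


def per_task_accuracy(results: list[tuple[str, str, str]]) -> dict[str, float]:
    """results holds (task, prediction, reference) triples; returns exact-match accuracy per task."""
    totals: dict[str, int] = defaultdict(int)
    correct: dict[str, int] = defaultdict(int)
    for task, prediction, reference in results:
        totals[task] += 1
        correct[task] += _matches(prediction, reference)
    return {task: correct[task] / totals[task] for task in totals}


def failures(results: list[tuple[str, str, str]], task: str) -> list[tuple[str, str]]:
    """Collect (prediction, reference) mismatches for one task for manual error analysis."""
    return [(p, r) for t, p, r in results if t == task and not _matches(p, r)]


results = [
    ("translation", "Hola mundo.", "Hola mundo."),
    ("translation", "Hola, mundo", "Hola mundo."),
    ("qa", "Paris", "paris"),
]
print(per_task_accuracy(results))        # {'translation': 0.5, 'qa': 1.0}
print(failures(results, "translation"))  # [('Hola, mundo', 'Hola mundo.')]
```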

Markovate’s expert consultation and ongoing technical support are integral to our service, guiding you through best practices and advanced techniques in instruction tuning. Partnering with us ensures your AI models are accurate, reliable, and perfectly aligned with your specific needs.

FAQs about Instruction Tuning

What is Instruction Tuning?

Instruction tuning is a specialized form of fine-tuning employed in machine learning. It involves training large language models (LLMs) on a dataset of instructions paired with their corresponding desired outputs. This approach improves the model’s ability to understand and follow new instructions, leading to more accurate responses that better match what the user wants.

What is the Difference Between Instruction Tuning and Fine-tuning?

Instruction tuning is itself a form of fine-tuning, but the goals differ. Traditional fine-tuning tailors a pre-trained model to one specific task, such as sentiment analysis. Instruction tuning trains the model on many tasks phrased as natural-language instructions, so that it learns to follow new instructions it has not seen before.

What is the Objective of Instruction Tuning?

Instruction tuning focuses on improving the ability of large language models (LLMs) to follow instructions given in natural language. It bridges the gap between how models are pre-trained (to predict the next word across large amounts of text) and what users want from them (to carry out their instructions helpfully). In practice, it works by leveraging labeled datasets, much as we feed labeled data to train AI models for other tasks.
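A common choice in supervised instruction tuning (though not the only one) is to compute the training loss only on the response tokens, so the model is graded on producing the answer rather than on reproducing the instruction. The sketch below uses made-up token ids instead of a real tokenizer; `build_example` and `masked_nll` are hypothetical helpers. The value -100 matches the default `ignore_index` of PyTorch’s cross-entropy loss.

```python
import math

# PyTorch's cross-entropy loss skips targets equal to -100 by default (ignore_index=-100).
IGNORE_INDEX = -100


def build_example(prompt_ids: list[int], target_ids: list[int]) -> tuple[list[int], list[int]]:
    """Concatenate prompt and target tokens; mask the prompt out of the labels.

    The model still reads the instruction, but it is only graded on the response.
    (Many causal-LM training setups shift labels by one position internally.)
    """
    input_ids = prompt_ids + target_ids
    labels = [IGNORE_INDEX] * len(prompt_ids) + target_ids
    return input_ids, labels


def masked_nll(correct_token_probs: list[float], labels: list[int]) -> float:
    """Average negative log-likelihood over positions whose label is not masked.

    correct_token_probs[i] is the probability the model gave the correct token at position i.
    """
    losses = [-math.log(p) for p, label in zip(correct_token_probs, labels) if label != IGNORE_INDEX]
    return sum(losses) / len(losses)


# Hypothetical token ids: three for the instruction, two for the response.
input_ids, labels = build_example([101, 102, 103], [201, 202])
print(labels)  # [-100, -100, -100, 201, 202]
# Low probabilities on the instruction tokens do not affect the loss.
print(round(masked_nll([0.1, 0.1, 0.1, 0.5, 0.5], labels), 4))  # 0.6931
```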

What is the Difference Between Prompt Engineering and Instruction Tuning?

Prompt engineering works at inference time: the user carefully crafts the wording, examples, and format of the input to coax the desired output from a model whose weights stay fixed. Instruction tuning instead changes the model itself, directly teaching it to comprehend instructions, which reduces the heavy reliance on prompt engineering. Think of it as giving the LLM a crash course in human communication patterns: the model learns to interpret and respond to our requests better, so it can achieve greater accuracy across diverse tasks without elaborate prompts.
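The difference shows up in what the user has to write. The sketch below builds the same translation request two ways: a few-shot prompt of the kind often used with a base model, where worked examples demonstrate the task, and a plain instruction of the kind an instruction-tuned model is trained to follow. No model is called; the function names and the "English:/Spanish:" layout are illustrative assumptions.

```python
def few_shot_prompt(demonstrations: list[tuple[str, str]], query: str) -> str:
    """Prompt-engineering style: show a base model worked examples so it can infer the task."""
    shots = "\n\n".join(f"English: {src}\nSpanish: {tgt}" for src, tgt in demonstrations)
    return f"{shots}\n\nEnglish: {query}\nSpanish:"


def instruction_prompt(query: str) -> str:
    """Instruction-tuned style: state the task directly in natural language."""
    return f'Translate "{query}" into Spanish.'


demos = [("Good night", "Buenas noches"), ("Thank you", "Gracias")]
print(few_shot_prompt(demos, "Hello world"))
print(instruction_prompt("Hello world"))
```

The few-shot version depends on choosing good demonstrations and a consistent layout; the instruction version relies on the model having learned, during tuning, what “Translate … into Spanish” means.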

Conclusion

As AI moves further toward unlocking the potential of large language models, instruction tuning will shape how humans and machines interact through language. It’s about teaching models to understand, assist, and truly engage with us in meaningful and productive ways.

The beauty of instruction tuning lies in its elegance. We’re shifting from treating LLMs as text predictors that must be coaxed with carefully engineered prompts to teaching them to better understand us, the users. At this intersection of language and AI, instruction tuning might revolutionize how we interact with and benefit from technology. Despite challenges with dataset quality and bias, instruction tuning signals a shift toward more intuitive and human-centric AI.
