Camera-enabled devices have become ubiquitous extensions of internet-connected “smart” homes, and so have concerns about unwanted prying eyes. In recent years, hackers have reportedly tapped into Amazon-owned Ring cameras to spy on young girls in their bedrooms. Elsewhere, sensitive photos of people in their bathrooms, collected by roaming robot vacuum cleaners, have leaked onto social media sites. There is growing public concern about just who can ultimately view this vast supply of sensitive data. That can make accepting cameras into the home a tricky compromise for the privacy-conscious.

But a new thermal camera being developed by researchers from the University of Michigan aims to quell some of those privacy concerns by replacing an individual’s body entirely with a cartoon-like stick figure.

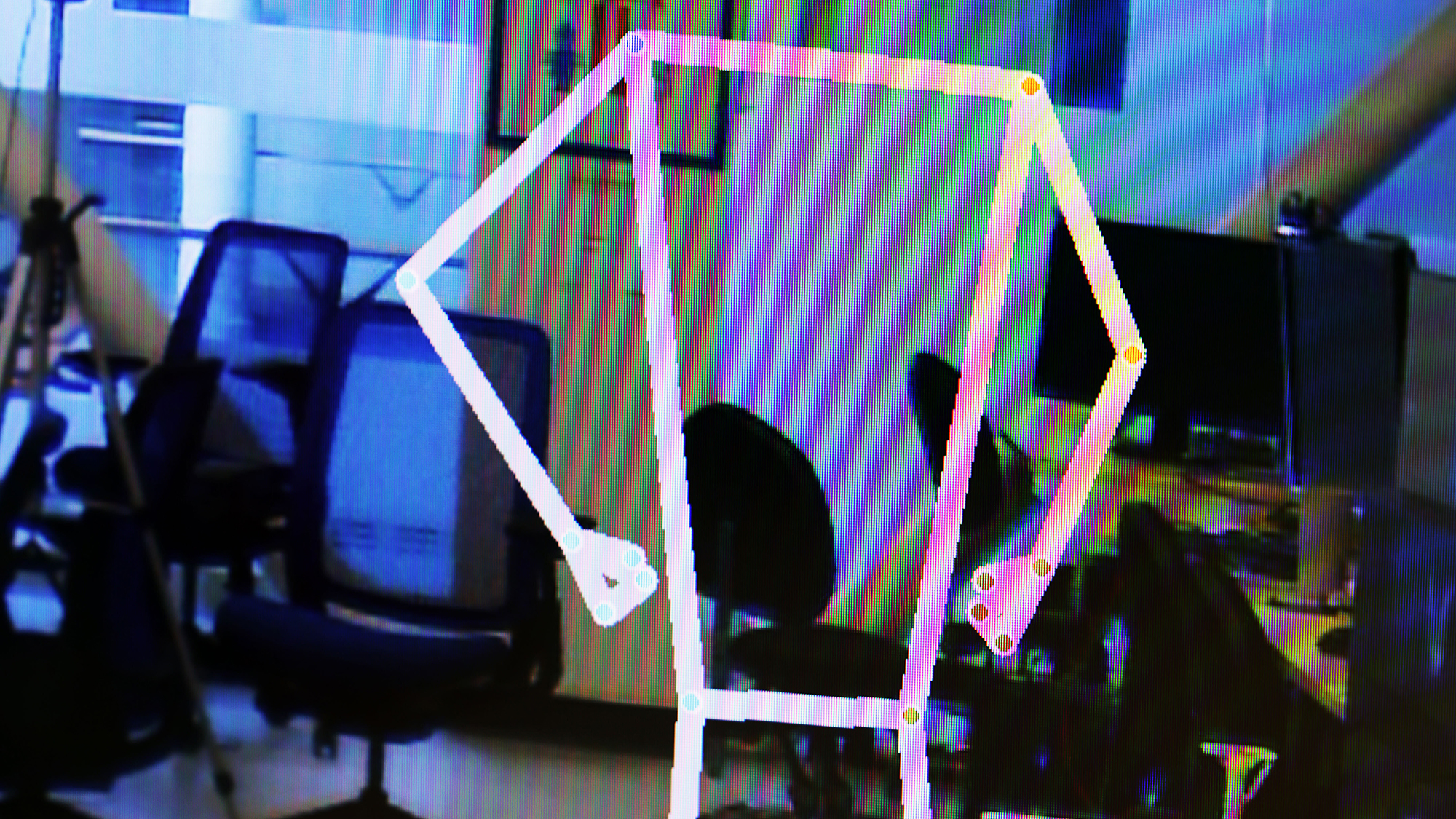

Researchers detailed their new camera technology, called “PrivacyLens,” as part of a recent study published in the Proceedings on Privacy Enhancing Technologies. The prototype device pairs an RGB camera with a thermal imaging system to detect individuals based on their heat signatures. An onboard GPU is then used to “subtract” the person’s full body from the image, leaving only a stick-figure silhouette outlining their body in the frame. The model can follow an individual’s movements to create a composite image that fills in the gaps where the person’s features once were. All of the detection and computing happens on the device in real time, which means the individual’s face and other personally identifiable features aren’t stored on the monitoring device or sent off-device to a remote cloud server.
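To make the “subtract” step concrete, here is a toy Python sketch of the general idea, not the researchers’ implementation. It assumes the thermal and RGB frames are already aligned pixel for pixel, treats any pixel in a hypothetical 30 to 38 °C band as part of a person, and swaps those pixels for a stored background estimate. The function names and the temperature thresholds are illustrative assumptions, and drawing the stick figure itself (which would require pose estimation) is left out.

```python
def sanitize_frame(rgb, thermal_c, background, low_c=30.0, high_c=38.0):
    """Replace pixels in a body-temperature band with background pixels.

    rgb, background: lists of rows of (r, g, b) tuples, same dimensions.
    thermal_c: list of rows of temperatures in Celsius, aligned to rgb.
    low_c / high_c: assumed thresholds, not values from the study.
    """
    sanitized, mask = [], []
    for rgb_row, temp_row, bg_row in zip(rgb, thermal_c, background):
        # True wherever the thermal reading looks like a person
        mask_row = [low_c <= t <= high_c for t in temp_row]
        sanitized.append(
            [bg if hot else px for px, bg, hot in zip(rgb_row, bg_row, mask_row)]
        )
        mask.append(mask_row)
    return sanitized, mask


def update_background(background, rgb, mask):
    """Refresh the background estimate only where no person was detected."""
    return [
        [bg if hot else px for px, bg, hot in zip(rgb_row, bg_row, mask_row)]
        for rgb_row, bg_row, mask_row in zip(rgb, background, mask)
    ]


# A 3x3 frame with one warm pixel in the centre
rgb = [[(200, 200, 200)] * 3 for _ in range(3)]
background = [[(50, 50, 50)] * 3 for _ in range(3)]
thermal_c = [[21.0, 21.0, 21.0], [21.0, 34.0, 21.0], [21.0, 21.0, 21.0]]

sanitized, mask = sanitize_frame(rgb, thermal_c, background)
new_background = update_background(background, rgb, mask)
```

Because the masked pixels are overwritten before anything leaves this function, the raw image of the person never needs to be stored or transmitted, which is the property the article describes.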

University of Michigan associate professor of computer science and engineering and study coauthor Alanson Sample said that he believes cameras equipped with PrivacyLens would “be a far safer product than what we currently have.”

“Most consumers do not think about what happens to the data collected by their favorite smart home devices,” Sample said. “In most cases, raw audio, images and videos are being streamed off these devices to the manufacturers’ cloud-based servers, regardless of whether or not the data is actually needed for the end application.”

PrivacyLens, by contrast, is intended to remove instances of faces, hair color, skin color, gender estimation, and body shape, five key physical characteristics often used to identify people. Researchers tested PrivacyLens in three different environments (an office, a home living room, and an outdoor park) and found it achieved a privacy sanitization rate of 99.1%. Faces and body shape had the highest success rates, while skin color was removed at 97.4%, 98.5%, and 98.9% in the office, home, and park tests, respectively. Not a single image in the test set had a “total failure” where all five major personal identifiers listed above were exposed. The research also included a sliding privacy scale that lets users decide the amount of identifiable information they are comfortable with sharing in different contexts. That means a robot vacuum cleaner could theoretically still “see” its owner’s face in a relatively open area like the kitchen, but not in a more sensitive part of the house like the bathroom or bedroom.
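One way to picture a sliding privacy scale is as a per-room policy that decides which identifiers get removed. The Python sketch below is purely illustrative: the level names, room names, and the `identifiers_to_remove` helper are assumptions made for this example and do not come from the study.

```python
# The five identifiers named in the article
IDENTIFIERS = ("face", "hair_color", "skin_color", "gender", "body_shape")

# Hypothetical privacy levels, from least to most restrictive
PRIVACY_LEVELS = {
    "open": frozenset(),                        # nothing removed
    "partial": frozenset({"face", "skin_color"}),
    "full": frozenset(IDENTIFIERS),             # everything removed
}

# Hypothetical per-room settings chosen by the user
ROOM_POLICY = {
    "kitchen": "open",
    "living_room": "partial",
    "bathroom": "full",
    "bedroom": "full",
}


def identifiers_to_remove(room):
    """Return the identifiers to strip in a room, defaulting to full privacy."""
    level = ROOM_POLICY.get(room, "full")
    return PRIVACY_LEVELS[level]


kitchen = identifiers_to_remove("kitchen")
bathroom = identifiers_to_remove("bathroom")
unknown = identifiers_to_remove("garage")
```

Defaulting unlisted rooms to the most restrictive level is a design choice in this sketch: if the device cannot tell where it is, it errs on the side of removing everything.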

“We want to give people control over their private information and who has access to it,” Sample added.

Privacy-preserving tech could make in-home monitoring less worrisome

A camera capable of automatically detecting and removing identifiable information could provide an added layer of peace of mind for homeowners debating which in-home security cameras or camera-equipped robots to buy. It could also give device manufacturers an opportunity to widen their audience and reach an underserved market of privacy-conscious homeowners. Higher levels of trust and comfort with cameras could also increase their effectiveness as medical monitoring devices for older adults. Looking to the future, these cameras could also feasibly allow an emerging fleet of camera-equipped, bipedal, humanoid robots more freedom to work in manufacturing facilities or inside homes and healthcare facilities with less risk of sensitive data leakage.

“Cameras provide rich information to monitor health,” University of Michigan doctoral student in computer science and engineering Yasha Iravantchi said in a statement. “It could help track exercise habits and other activities of daily living, or call for help when an elderly person falls.”

In theory, autonomous vehicle companies, drone manufacturers, and public safety companies could use PrivacyLens to prevent their devices from inadvertently sucking up large swaths of personally identifiable data captured in public settings. That added layer of protection would make these companies less of an immediate target for hackers, data brokers, or law enforcement seeking information without a warrant.

At the same time, traditional security camera firms are unlikely to ever implement PrivacyLens or options like it. Businesses ranging from sports teams to healthcare facilities specifically rely on the identifying capabilities of security cameras, whether we like it or not.
